Table of Contents

1. Introduction

Overview of Object Detection and Tracking

Introduction to YOLOv8 and DeepSORT

2. Project Setup

Cloning the Repository

Setting Up the Development Environment

3. Implementation Steps

Downloading and Organizing Required Files

Running Object Detection with YOLOv8

4. Understanding the Code

Overview of the Main Script

Detailed Explanation of Key Functions

5. Results and Output

Interpreting the Output Videos

Speed Estimation and Vehicle Counting

6. Extending the Project

Customizing the Detection Model

Adding New Features

7. Conclusion

Summary of the Project

Potential Applications

1. Introduction

Object detection and tracking are crucial components in modern computer vision applications, used in everything from autonomous vehicles to surveillance systems. In this blog, we’ll delve into the implementation of object detection, tracking, and speed estimation using YOLOv8 (You Only Look Once version 8) and DeepSORT (Simple Online and Realtime Tracking with a Deep Association Metric).

YOLOv8, released by Ultralytics in 2023, is a recent member of the YOLO family of single-stage detectors, known for combining fast inference with good accuracy on images and videos. DeepSORT extends SORT (Simple Online and Realtime Tracking) with a deep learning-based appearance descriptor, so detections are matched to existing tracks by visual similarity as well as by predicted motion. This reduces identity switches in challenging scenarios such as occlusion and crowded scenes.

2. Project Setup

Before we dive into the code, let’s set up the project environment.

Cloning the Repository

First, clone the GitHub repository that contains the necessary code:

    git clone https://github.com/Gayathri-Selvaganapathi/vehicle_tracking_counting.git
    cd vehicle_tracking_counting

This repository includes scripts for object detection, tracking, and speed estimation, along with pre-trained models and sample data.

Setting Up the Development Environment

It’s essential to create a clean Python environment to avoid dependency conflicts. You can do this with virtualenv or conda; the conda version is shown here:

    # Using conda
    conda create -n env_tracking python=3.8
    conda activate env_tracking

With the environment activated, install the required dependencies by running:

    pip install -r requirements.txt
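Before moving on, it can help to confirm that the packages actually landed in the active environment. The snippet below is a minimal sketch of such a check. The module list is an assumption on our part (the names a YOLOv8 and DeepSORT project typically imports), so adjust it to match the repository's requirements.txt.

```python
import importlib.util
import sys

# Hypothetical list of import names; edit it to match requirements.txt.
REQUIRED_MODULES = ["torch", "cv2", "numpy", "ultralytics"]


def missing_modules(module_names):
    """Return the top-level module names that cannot be found for import."""
    return [name for name in module_names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    missing = missing_modules(REQUIRED_MODULES)
    print("Missing modules:", ", ".join(missing) if missing else "none")
```

If anything is reported as missing, re-run the pip command inside the activated environment.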

3. Implementation Steps

Now that we have our environment set up, we can proceed with the implementation.

Downloading and Organizing Required Files

The project requires some additional files that aren’t included in the GitHub repository, such as the DeepSORT model files and a sample video for testing.

Download the DeepSORT files from the provided Google Drive link.

Unzip the downloaded files and place them in the appropriate directories as outlined in the project README.

For example, the DeepSORT files should be placed in the yolov8-deepsort/deep_sort directory, and the sample video should be in yolov8-deepsort/data.
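A misplaced folder is a common cause of import or file-not-found errors later on, so a quick layout check can save time. This is a small sketch that assumes the two directories named above sit directly under the project root; the helper name missing_dirs is ours, not something the repository provides.

```python
from pathlib import Path

# Directories named in the text above, assumed relative to the project root.
EXPECTED_DIRS = ["deep_sort", "data"]


def missing_dirs(project_root, expected=EXPECTED_DIRS):
    """Return the expected sub-directories that do not exist under project_root."""
    root = Path(project_root)
    return [name for name in expected if not (root / name).is_dir()]


if __name__ == "__main__":
    # "yolov8-deepsort" is the project folder mentioned in the text.
    print("Missing directories:", missing_dirs("yolov8-deepsort") or "none")
```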

Running Object Detection with YOLOv8

With everything set up, you can now run the object detection and tracking script. Here’s how you can do it:

    python detect.py --source data/sample_video.mp4 --yolo-model yolov8 --deep-sort deep_sort_pytorch --output runs/detect

This command processes the sample_video.mp4 file, detects objects using the YOLOv8 model, tracks them with DeepSORT, and saves the output video in the runs/detect directory.

4. Understanding the Code

Let’s break down the main parts of the code to understand how it works.

Overview of the Main Script

The primary script, detect.py, orchestrates the entire detection and tracking process. Here's a high-level view of what the script does:

- Load the YOLOv8 model: This model is used for detecting objects in each frame.
- Initialize the DeepSORT tracker: This tracker assigns unique IDs to objects and tracks them across frames.
- Process the video frame by frame: For each frame, the script detects objects, tracks them, and then draws bounding boxes and labels around them.
- Output the processed video: The final video is saved with all the detected and tracked objects, along with their speeds if applicable.
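The steps above boil down to a simple per-frame loop. The sketch below shows only that control flow, with the detector, tracker, and drawing code passed in as plain callables. It is our own illustration rather than the structure of detect.py; in the real script the frames would come from something like cv2.VideoCapture and the annotated frames would go to a video writer.

```python
def run_pipeline(frames, detect, track, draw):
    """Yield one annotated frame per input frame."""
    for frame in frames:
        detections = detect(frame)          # find objects in this frame
        tracks = track(detections, frame)   # attach persistent IDs to them
        yield draw(frame, tracks)           # annotate the frame for output


# Usage with stand-in callables, so the flow can be followed without a model.
frames = ["frame0", "frame1"]
annotated = list(
    run_pipeline(
        frames,
        detect=lambda frame: [(10, 20, 50, 80)],
        track=lambda detections, frame: [(1, box) for box in detections],
        draw=lambda frame, tracks: (frame, tracks),
    )
)
```

Keeping the three stages separate like this makes it easy to swap the detector or tracker without touching the loop.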

Detailed Explanation of Key Functions

Here are some critical functions in the script:

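Initialize Tracker

    from deep_sort.deep_sort import DeepSort

    def init_tracker():
        # Build the tracker from the DeepSORT appearance-model checkpoint.
        # use_cuda=True assumes a CUDA-capable GPU; set it to False to run on CPU.
        return DeepSort("deep_sort/model.ckpt", use_cuda=True)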

This function initializes the DeepSORT tracker, which will be used to track detected objects across frames.
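To build some intuition for what "tracking across frames" means, here is a deliberately simplified tracker that matches boxes purely by overlap (IoU) with the previous frame. To be clear, this is not DeepSORT: DeepSORT adds a Kalman filter for motion prediction and an appearance embedding for re-identification. The class and function names are ours, for illustration only.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


class GreedyIouTracker:
    """Toy tracker: each detection takes the ID of the best-overlapping old box."""

    def __init__(self, iou_threshold=0.3):
        self.iou_threshold = iou_threshold
        self.tracks = {}   # track_id -> box from the previous frame
        self.next_id = 1

    def update(self, boxes):
        """Return a list of (track_id, box) for the current frame."""
        unmatched = dict(self.tracks)
        current = {}
        results = []
        for box in boxes:
            best_id, best_iou = None, self.iou_threshold
            for track_id, old_box in unmatched.items():
                overlap = iou(box, old_box)
                if overlap >= best_iou:
                    best_id, best_iou = track_id, overlap
            if best_id is None:
                # No sufficiently overlapping track: start a new one.
                best_id = self.next_id
                self.next_id += 1
            else:
                # Each old track can be claimed by only one detection.
                del unmatched[best_id]
            current[best_id] = box
            results.append((best_id, box))
        self.tracks = current  # tracks with no match this frame are dropped
        return results
```

A tracker like this loses an object as soon as it is hidden for a single frame, which is exactly the kind of failure DeepSORT's motion and appearance models are designed to reduce.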

Object Detection with YOLOv8

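    def detect_objects(frame, model):
        # Ultralytics YOLOv8 returns a list with one Results object per input image.
        results = model(frame)
        # Each row is [x1, y1, x2, y2, confidence, class_id].
        return results[0].boxes.data

This function runs YOLOv8 on a single video frame. Assuming model is an Ultralytics YOLO instance, it returns one row per detection containing the bounding box corners, the confidence score, and the class ID.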
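For a vehicle-counting project, we usually want to keep only vehicle classes and discard low-confidence detections before handing anything to the tracker. The sketch below assumes each detection is a row of [x1, y1, x2, y2, confidence, class_id] (convert a tensor with .tolist() first) and that the model uses the standard COCO class indices. The function name and the 0.4 threshold are illustrative choices, not values taken from the repository.

```python
# COCO class indices for common road vehicles.
VEHICLE_CLASS_IDS = {2: "car", 3: "motorcycle", 5: "bus", 7: "truck"}


def filter_vehicles(detections, min_confidence=0.4):
    """Keep confident vehicle detections as (box, confidence, class_name) tuples."""
    kept = []
    for x1, y1, x2, y2, confidence, class_id in detections:
        class_id = int(class_id)
        if class_id in VEHICLE_CLASS_IDS and confidence >= min_confidence:
            kept.append(((x1, y1, x2, y2), float(confidence), VEHICLE_CLASS_IDS[class_id]))
    return kept


rows = [
    [0, 0, 10, 10, 0.9, 2.0],   # confident car: kept
    [0, 0, 5, 5, 0.8, 0.0],     # person: dropped
    [1, 1, 4, 4, 0.2, 7.0],     # low-confidence truck: dropped
    [2, 2, 8, 8, 0.5, 5.0],     # bus: kept
]
vehicles = filter_vehicles(rows)
```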

Drawing Bounding Boxes

    import cv2

    def draw_boxes(frame, bbox, identities, names):
        for i, box in enumerate(bbox):
            x1, y1, x2, y2 = [int(v) for v in box]
            # Fall back to ID 0 when the tracker supplied no identities.
            track_id = int(identities[i]) if identities is not None else 0
            label = f'{names[i]} {track_id}'
            # compute_color_for_labels is a helper that is not shown here.
            color = compute_color_for_labels(track_id)
            cv2.rectangle(frame, (x1, y1), (x2, y2), color, 2)
            cv2.putText(frame, label, (x1, y1 - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.75, color, 2)
        return frame

This function takes the bounding boxes and identities of tracked objects, draws them on the frame, and annotates them with the object’s name and ID.
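The compute_color_for_labels helper is called above but not shown. One common way to write such a helper is to hash the track ID into a fixed color, so the same object keeps the same color in every frame. The version below is a sketch of that idea; the repository's own implementation may differ.

```python
# Constants used to spread consecutive IDs across the color space.
PALETTE = (2 ** 11 - 1, 2 ** 15 - 1, 2 ** 20 - 1)


def compute_color_for_labels(label):
    """Map an integer ID to a fixed (B, G, R) color with channels in 0..254."""
    return tuple(int((p * (label ** 2 - label + 1)) % 255) for p in PALETTE)
```

Because the color depends only on the ID, no lookup table has to be stored or kept in sync with the tracker.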

Speed Estimation

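    import numpy as np

    def estimate_speed(coord1, coord2, fps):
        # Pixel distance moved between two consecutive frames.
        d_pixels = np.linalg.norm(np.array(coord2) - np.array(coord1))
        # PIXELS_PER_METER is a scene-specific calibration constant (not shown here).
        d_meters = d_pixels / PIXELS_PER_METER
        speed = d_meters * fps * 3.6  # meters per frame -> m/s -> km/h
        return speed

This function estimates the speed of a tracked object from the distance it traveled between two consecutive frames and converts the result into km/h. The estimate is only as good as PIXELS_PER_METER, which has to match the scale of the camera view.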
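As a quick sanity check of the formula: an object that moves 5 pixels per frame, at an assumed scale of 8 pixels per meter and 30 fps, covers 0.625 m per frame, which is 18.75 m/s or 67.5 km/h. Single-frame estimates tend to jitter because bounding boxes wobble, so a variation worth considering is averaging over a short window of positions. The helper below is a sketch of that variation; its name and parameters are ours, not the repository's.

```python
import math


def average_speed_kmh(positions, fps, pixels_per_meter):
    """Average speed in km/h over a list of per-frame (x, y) positions."""
    if len(positions) < 2:
        return 0.0
    # Sum the pixel distance of every frame-to-frame step along the path.
    path_pixels = sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))
    elapsed_seconds = (len(positions) - 1) / fps
    meters = path_pixels / pixels_per_meter
    return meters / elapsed_seconds * 3.6  # m/s -> km/h


# Three positions, 5 pixels apart, reproduce the arithmetic above.
speed = average_speed_kmh([(0, 0), (3, 4), (6, 8)], fps=30, pixels_per_meter=8)
```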

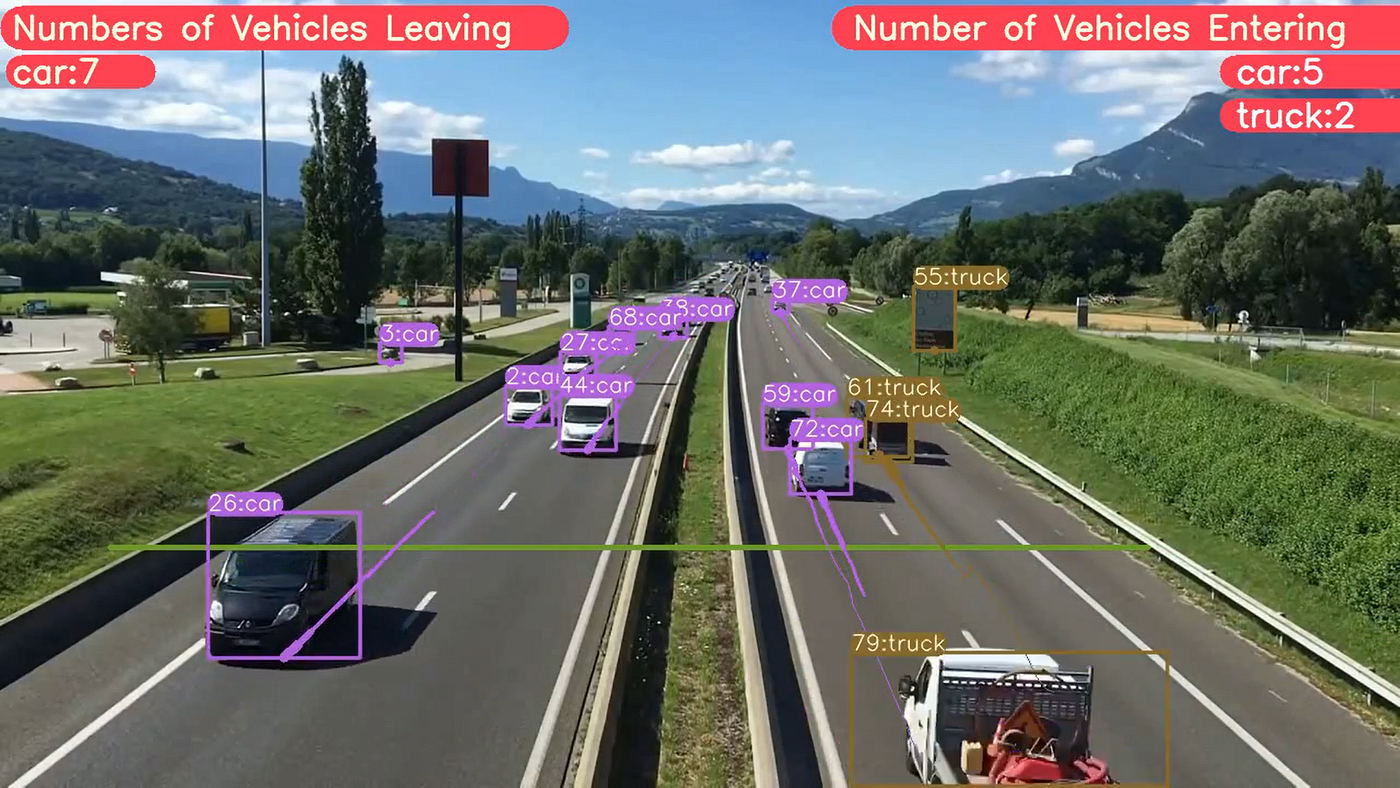

5. Results and Output

After running the script, you should see an output video where objects are detected, tracked, and labeled with their IDs. The video will also display the estimated speed of moving objects if enabled.

Interpreting the Output Videos

In the output video:

- Bounding Boxes: Each detected object will have a bounding box drawn around it.
- Object ID and Label: The label on the bounding box will show the object’s class and a unique ID assigned by the tracker.
- Speed Estimation: If speed estimation is enabled, the speed of each moving object will be displayed.

Speed Estimation and Vehicle Counting

The script also includes features for counting vehicles and estimating their speeds. When a vehicle crosses a predefined line, the vehicle count is incremented, and the vehicle’s speed is estimated from the Euclidean distance between its positions in consecutive frames.

    # Counting vehicles crossing a line
    if is_crossing_line(bbox, line_position):
        vehicle_count += 1

    # Estimating speed
    speed = estimate_speed(previous_coord, current_coord, fps)
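One detail to watch with line counting is that a vehicle can overlap the line for several frames, so a naive check may count it more than once. A common remedy is to compare the object's center in the previous and current frame and to remember which track IDs have already been counted. The sketch below assumes a horizontal counting line at y = line_y; the class is our own illustration, not the repository's is_crossing_line.

```python
def box_center(bbox):
    """Center point of an (x1, y1, x2, y2) box."""
    x1, y1, x2, y2 = bbox
    return ((x1 + x2) / 2, (y1 + y2) / 2)


class LineCounter:
    """Counts each track ID once, when its center crosses a horizontal line."""

    def __init__(self, line_y):
        self.line_y = line_y
        self.last_center_y = {}    # track_id -> center y in the previous frame
        self.counted_ids = set()

    def update(self, track_id, bbox):
        """Record the new position; return True if this update counted the vehicle."""
        _, center_y = box_center(bbox)
        previous_y = self.last_center_y.get(track_id)
        self.last_center_y[track_id] = center_y
        if previous_y is None or track_id in self.counted_ids:
            return False
        # A crossing happened if the center switched sides of the line.
        crossed = (previous_y < self.line_y) != (center_y < self.line_y)
        if crossed:
            self.counted_ids.add(track_id)
        return crossed

    @property
    def count(self):
        return len(self.counted_ids)
```

Calling update once per tracked object per frame keeps the count stable even when a vehicle lingers on the line.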

6. Extending the Project

This project can serve as a foundation for more advanced applications. Here are a few ideas:

Customizing the Detection Model

You can fine-tune the YOLOv8 model to detect specific object classes relevant to your application. This might involve retraining the model on a custom dataset.
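As a rough starting point, fine-tuning with the Ultralytics package needs a dataset description file and a call to train. The sketch below builds the text of such a file and wraps the training call in a function. The folder layout (images/train, images/val), the yolov8n.pt starting weights, and the epoch count are assumptions chosen for illustration; this project's own training setup may look different.

```python
def dataset_yaml(dataset_root, class_names):
    """Build the text of an Ultralytics-style dataset description file."""
    lines = [
        f"path: {dataset_root}",
        "train: images/train",
        "val: images/val",
        "names:",
    ]
    lines += [f"  {index}: {name}" for index, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


def fine_tune(data_yaml_path, base_weights="yolov8n.pt", epochs=50, image_size=640):
    """Fine-tune a pretrained YOLOv8 model on the dataset described by data_yaml_path."""
    # Imported lazily so dataset_yaml works without ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(base_weights)
    model.train(data=data_yaml_path, epochs=epochs, imgsz=image_size)
    return model


if __name__ == "__main__":
    # Hypothetical dataset location and class list.
    print(dataset_yaml("datasets/vehicles", ["car", "bus", "truck"]))
```

Write the generated text to a .yaml file, then pass that file's path to fine_tune once the images and labels are in place.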

Adding New Features

Consider implementing real-time processing, multi-camera tracking, or even integrating with a web-based dashboard for live monitoring and control.

7. Conclusion

In this blog, we walked through the implementation of a sophisticated object detection and tracking system using YOLOv8 and DeepSORT. This system is capable of not only detecting and tracking multiple objects but also estimating their speeds and counting vehicles.

Potential Applications:

- Traffic Monitoring: Detect and track vehicles, estimate their speed, and count them for traffic flow analysis.
- Surveillance: Monitor people or objects in a secure environment, track their movements, and raise alerts for suspicious activity.
- Autonomous Vehicles: Use this system as part of a larger autonomous driving stack to understand the environment and make driving decisions.

This tutorial demonstrates the power and flexibility of combining state-of-the-art deep learning models for real-world applications. Whether you’re working on a personal project or a professional system, these techniques can be adapted and expanded to meet your needs.